We’re fascinated by machine learning and artificial intelligence, so we decided to put that knowledge to work in a practical application.

And this is how Abby, our smart health assistant, was born.

A lot happens behind the scenes in this short video: face recognition, speech-to-text, natural language processing, and text-to-speech.

Here is a technical peek into how we made this happen.

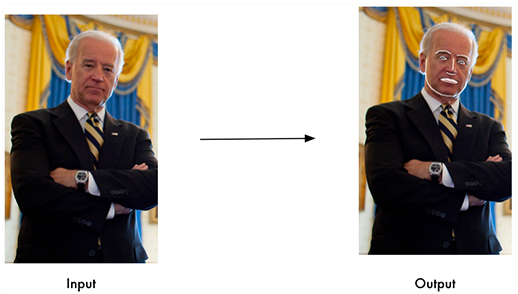

Step 1: Identify who is interacting with Abby via advanced face detection and recognition

When a participant steps in front of the camera, our face recognition system kicks in once a face has been detected in 50 frames (a little under one second on a camera running at 60 frames per second). It then extracts information about the face and compares it with the identities stored in our database.
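The trigger described above amounts to counting frames before running the more expensive recognition step. The sketch below is a minimal illustration under our own assumptions, not Abby’s actual code: the `FaceGate` class and its `required_frames` parameter are hypothetical, and it assumes the count resets whenever a frame contains no face. It also spells out the timing: at 60 frames per second, 50 frames take 50/60 ≈ 0.83 seconds.

```python
class FaceGate:
    """Hypothetical gate: trigger recognition once a face has been seen in N consecutive frames."""

    def __init__(self, required_frames: int = 50) -> None:
        self.required_frames = required_frames
        self.count = 0

    def update(self, face_detected: bool) -> bool:
        """Feed one frame's detection result; return True exactly once, when the threshold is reached."""
        if not face_detected:
            # Assumption: losing the face resets the count.
            self.count = 0
            return False
        self.count += 1
        return self.count == self.required_frames


def seconds_for_frames(frames: int, fps: float) -> float:
    """Time a camera running at `fps` needs to produce `frames` frames."""
    return frames / fps


if __name__ == "__main__":
    gate = FaceGate(required_frames=50)
    fired = [i for i in range(120) if gate.update(face_detected=True)]
    print(fired)  # [49]: the 50th frame triggers recognition
    print(round(seconds_for_frames(50, 60), 2))  # 0.83
```

In a real capture loop, `face_detected` would come from an OpenCV face detector run on each frame, and the recognition step would only run when `update` returns True.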

If the face belongs to one of our known identities, we can personalize the experience for that user. In our video, Abby recognizes the user as Chet, so it pulls up all of the information about Chet stored in the database.
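Comparing a new face against the database boils down to a nearest-neighbour search over face encodings. The Face Recognition package represents each face as a 128-dimensional encoding, and its `compare_faces` function treats two faces as a match when the Euclidean distance between their encodings is at most a tolerance (0.6 by default). The sketch below re-implements that comparison in plain Python so it runs without the library; the `identify` function and the in-memory `known_faces` dictionary are hypothetical stand-ins for a database lookup, and the short toy vectors stand in for real 128-dimensional encodings.

```python
import math
from typing import Dict, Optional, Sequence


def identify(
    encoding: Sequence[float],
    known: Dict[str, Sequence[float]],
    tolerance: float = 0.6,
) -> Optional[str]:
    """Return the name of the closest known face, or None if none is within `tolerance`."""
    best_name: Optional[str] = None
    best_distance = math.inf
    for name, known_encoding in known.items():
        # Euclidean distance, the same metric face_recognition.face_distance uses.
        distance = math.dist(encoding, known_encoding)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= tolerance else None


known_faces = {"Chet": [0.1, 0.2, 0.3], "Dana": [0.9, 0.8, 0.7]}  # hypothetical entries
print(identify([0.12, 0.21, 0.29], known_faces))  # Chet
print(identify([5.0, 5.0, 5.0], known_faces))     # None
```

With the actual library, `face_recognition.face_encodings(image)` produces the encodings, and `face_recognition.compare_faces` or `face_recognition.face_distance` perform this comparison on NumPy arrays.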

We used two technologies to make this happen: OpenCV and the Face Recognition package for Python (face_recognition). OpenCV is an open-source computer vision and machine learning library, and Face Recognition is a Python library built on dlib’s deep learning face recognition model.

OpenCV detects when a face enters the camera’s view, while Face Recognition runs the models that encode and identify that face.

Face Recognition lets us profile the face from multiple angles. It locates up to nine distinct facial features (chin, left and right eyebrows, nose bridge, nose tip, left and right eyes, top lip, and bottom lip) and accounts for the angle and rotation of the face. This lets us recognize people under varied conditions, even when they are not looking directly at the camera.
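One simple way to quantify the rotation mentioned above is to measure the tilt of the line joining the two eyes. This is a hypothetical sketch, not necessarily what the library does internally: it takes landmarks in the dictionary shape that `face_recognition.face_landmarks()` returns (feature name mapped to a list of `(x, y)` points) and estimates in-plane head rotation (roll) from the eye centroids. The helper names and toy coordinates are ours.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def centroid(points: List[Point]) -> Point:
    """Average position of a group of landmark points."""
    xs, ys = zip(*points)
    return sum(xs) / len(xs), sum(ys) / len(ys)


def head_roll_degrees(landmarks: Dict[str, List[Point]]) -> float:
    """Estimate in-plane head rotation (roll) from the two eye centroids.

    0 means the eyes are level; the sign follows image coordinates, where y grows downward.
    """
    left_x, left_y = centroid(landmarks["left_eye"])
    right_x, right_y = centroid(landmarks["right_eye"])
    return math.degrees(math.atan2(right_y - left_y, right_x - left_x))


# Toy landmarks, not real detector output.
level = {"left_eye": [(100, 120), (110, 120)], "right_eye": [(150, 120), (160, 120)]}
tilted = {"left_eye": [(100, 120), (110, 120)], "right_eye": [(150, 170), (160, 170)]}
print(head_roll_degrees(level))   # 0.0
print(head_roll_degrees(tilted))  # 45.0
```

An estimate like this could be used to rotate the face upright before encoding it, or to skip frames where the head is tilted too far.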

Step 2: Convert the user’s verbal response into text (in real time)

The Abby app asks the user a question about their health, and the user answers in a natural speaking voice. Before that answer can reach our machine learning model, we have to convert it into text.

We used the Web Speech API (https://w3c.github.io/speech-api/), a browser API for speech-to-text. In Chrome, this API is backed by Google’s speech recognition service. The API listens to the user’s spoken response and returns a text transcript that is almost always accurate; we were impressed by how well it performs.
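Because recognition runs in real time, the browser can report partial (interim) results while the user is still talking, when the recognizer’s `interimResults` option is enabled, and it marks a result as final once it settles. That handling normally lives in a JavaScript `onresult` handler in the browser; to keep all examples here in Python, the sketch below mirrors each `SpeechRecognitionResult` as a plain dictionary with `isFinal` and `alternatives` (each carrying `transcript` and `confidence`). The `split_transcript` helper is hypothetical: it keeps the most confident alternative of each result and separates settled text, which can go to the NLP step, from text that may still change.

```python
from typing import Any, Dict, List, Tuple

# Plain-dict mirror of a browser SpeechRecognitionResult (hypothetical Python shape):
# {"isFinal": bool, "alternatives": [{"transcript": str, "confidence": float}, ...]}
Result = Dict[str, Any]


def split_transcript(results: List[Result]) -> Tuple[str, str]:
    """Return (final_text, interim_text) from a list of recognition results.

    For each result, keep the alternative with the highest confidence.
    Final text is settled; interim text may still be revised by the recognizer.
    """
    final_parts: List[str] = []
    interim_parts: List[str] = []
    for result in results:
        best = max(result["alternatives"], key=lambda alt: alt["confidence"])
        target = final_parts if result["isFinal"] else interim_parts
        target.append(best["transcript"].strip())
    return " ".join(final_parts), " ".join(interim_parts)


results = [
    {"isFinal": True, "alternatives": [{"transcript": "I slept", "confidence": 0.92}]},
    {"isFinal": False, "alternatives": [{"transcript": " about six hours", "confidence": 0.61}]},
]
print(split_transcript(results))  # ('I slept', 'about six hours')
```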

Once we get the text, we feed it into our natural language processing (NLP) model.

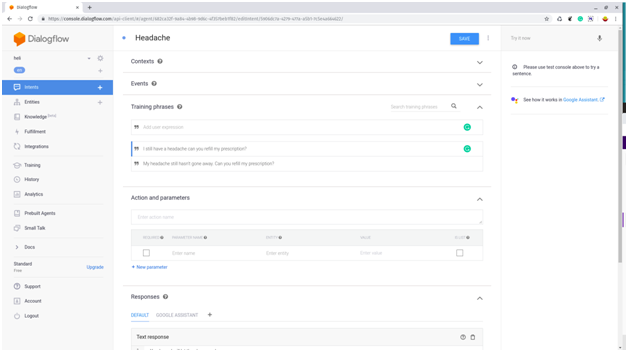

Step 3: Use natural language processing to understand the question and produce a personalized response

We pass the transcribed text to Google Dialogflow, which helps us work out what the user is asking and produce a specific response.

Dialogflow recognizes a question even when it isn’t phrased exactly the way we expected, so many different wordings of the same question map to the same intent.
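To make the idea of mapping many wordings to one intent concrete, here is a deliberately simple stand-in. It is not how Dialogflow works internally (Dialogflow uses its own machine learning matching, and you never write this code when you use it); it scores an utterance against each intent’s training phrases by word overlap (Jaccard similarity) and returns the best intent above a threshold. The intent names, training phrases, and the 0.3 threshold are hypothetical.

```python
import re
from typing import Dict, List, Optional, Set


def tokens(text: str) -> Set[str]:
    """Lower-case word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_intent(utterance: str, intents: Dict[str, List[str]], threshold: float = 0.3) -> Optional[str]:
    """Return the intent whose training phrase best overlaps the utterance, or None below `threshold`."""
    words = tokens(utterance)
    best_intent: Optional[str] = None
    best_score = 0.0
    for intent, phrases in intents.items():
        for phrase in phrases:
            score = jaccard(words, tokens(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


intents = {
    "exercise_advice": ["how much should I exercise", "what workout should I do today"],
    "sleep_advice": ["how can I sleep better", "how many hours should I sleep"],
}
print(match_intent("How much exercise should I get?", intents))  # exercise_advice
print(match_intent("What's the weather like?", intents))         # None
```

A real NLP service generalizes far beyond shared words (synonyms, word order, entities), which is exactly why we relied on Dialogflow rather than rules like these.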

From there, we train the system to tailor its response to several factors, such as the user’s age and information from their documented health history. We can also pull in data from their Fitbit and Apple Watch devices.

So whenever Chet steps in front of the camera to talk to Abby, he gets personalized advice.
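Dialogflow can pass a matched intent to your own fulfillment code, which is one natural place for this kind of personalization. The sketch below is hypothetical throughout: the `UserProfile` fields, the per-user step goal, the intent it answers, and the reply wording are ours, and daily step counts from a wearable stand in for the health data described above.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class UserProfile:
    """Hypothetical profile combining stored user data with wearable activity."""
    name: str
    daily_steps: List[int] = field(default_factory=list)  # e.g. synced from a fitness tracker
    step_goal: int = 8000  # hypothetical per-user goal stored in the profile


def exercise_reply(profile: UserProfile) -> str:
    """Fill a response template for a hypothetical 'exercise_advice' intent."""
    if not profile.daily_steps:
        return f"Hi {profile.name}, I don't have any activity data for you yet."
    average = round(mean(profile.daily_steps))
    if average >= profile.step_goal:
        return (f"Nice work, {profile.name}! You're averaging {average} steps a day, "
                f"above your goal of {profile.step_goal}.")
    return (f"{profile.name}, you're averaging {average} steps a day. "
            f"Let's work toward your goal of {profile.step_goal}.")


chet = UserProfile("Chet", daily_steps=[7000, 9000, 8500], step_goal=8000)
print(exercise_reply(chet))
# Nice work, Chet! You're averaging 8167 steps a day, above your goal of 8000.
```

Keeping the personalization in a small function like this makes it easy to add further factors from the user’s history without retraining the intent matching.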

Step 4: Convert the response text into speech

Now comes the fun part: making Abby speak.

To do this, we use the speech synthesis part of the Web Speech API, which Chrome supports natively and which offers Google voices.

Here is a block of code where we convert text into speech:

Step 5: Animate Abby while she is speaking

Abby is actually an animated GIF that sits in the background and is activated whenever the app needs to speak. The front end of the application relies heavily on JavaScript to call the relevant APIs.

Computer Vision + Machine Learning makes for a powerful application

We truly believe this technology can make a huge impact on the world, and a health assistant is just one of its many possible applications. If you’re looking to use this type of tech for your next project, feel free to reach out to us and we’ll help you create something that makes a difference!

